Why different cameras record colours differently

Tim Parkin

Amateur Photographer who plays with big cameras and film when in between digital photographs.

While we were working on the Big Camera comparison, one of the things that became quite clear was that the different sensor devices we looked at were producing images whose colour was quite different. More importantly, when we tried to fix the colour from one to look like another, it proved impossible.

This rang a few bells with me from a couple of years ago, when I was looking at whether it was possible to simulate Fuji Velvia 50 by creating some form of Photoshop action or ICC profile. It quickly became obvious that although we can approximate some of the colour changes that Velvia introduces (for instance blue shadows, a tendency to move colours toward their primaries), there were certain colour changes that were impossible to reproduce. Trying to change one part of the colour range would inevitably affect another part. Eventually I gave up on this, concluding there was either something magic going on or my Photoshop skills weren’t up to it.

I’ve also been looking at the colours produced by certain sensors, for instance comparing the colour out of Dav Thomas’ Sony A900 with my Canon 5DMkII, and saw similar differences in colour that look ‘uncorrectable’ (I’ve never been completely happy with some of the colour from the 5DMkII - preferring my old 5D by a bit and Dav’s A900 by a lot).

Obviously being a complete geek I had to work out what was happening and so started looking into colour. The very first thing that came to mind was some of my reading around inkjet printers where certain colours appeared different under different light. This effect is called ‘metamerism’ and for the interested amongst you, I’ll try to explain what it means. If you want to skip this section - head down to the ‘comparing sensors’ sections.

Metamerism

The first thing to understand about colour is that our perception of it is a compromise. The physical property of ‘colour’ is the subject of quite a bit of controversy. The actual physics of colour starts with a ‘spectral power distribution’, which tells you how much light an object emits, absorbs or transmits at each point in the spectrum.

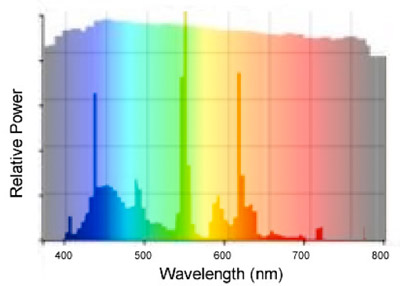

For instance, here is the spectrum of the sun compared with a D65 light bulb. Along the bottom is the frequency of the light and the ‘outline’ is the amplitude of each frequency. You’ll notice that they are completely different. However, they are both the same ‘colour’ (a cool white). The spiky trace, drawn in the saturated colours, is the lamp: it has one spike in the blue colour range, another in green and a final one in orangey/red.

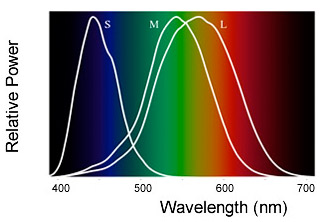

How can these be the same colour? Well, it turns out that our eyes don’t detect the full ‘spectrum’ of light; they have rods and cones that each detect the intensity of one section of that spectrum. The following diagram shows the sections.

This shows the L, M and S cones which combined together give you a colour response (in a similar way to the way R, G and B can create any colour).

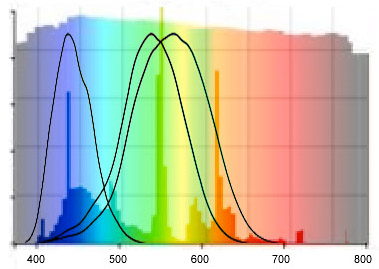

Here is how the D65 lamp manages to match the colour of sunlight. The blue spike ‘excites’ one part of the eye, the green spike ‘excites’ the M and L parts of the eye, and the red/orange spike provides a bit of separation between the M and L parts. (In our eye we have blue cones, green cones and red cones.)
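For the programmers amongst you, the matching trick can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the three cone curves are made-up bell shapes (the centres and widths in `CONES` are hypothetical, not measured data), the smooth light is simply flat rather than real sunlight, and the spike positions are picked by hand. The code solves for the three spike strengths that give exactly the same cone totals as the smooth light.

```python
import math

WAVELENGTHS = range(400, 701, 10)  # visible range in nm, sampled every 10 nm

def gaussian(wl, centre, width):
    """Bell-shaped sensitivity curve; a stand-in for a real cone response."""
    return math.exp(-0.5 * ((wl - centre) / width) ** 2)

# Hypothetical (centre, width) pairs in nm for the S, M and L cones.
CONES = [(445, 25), (540, 40), (570, 45)]

def cone_response(spectrum):
    """spectrum maps wavelength (nm) -> power; returns [S, M, L] totals."""
    return [sum(p * gaussian(wl, c, w) for wl, p in spectrum.items())
            for c, w in CONES]

def det3(m):
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def solve3(m, rhs):
    """Solve the 3x3 linear system m @ x = rhs using Cramer's rule."""
    d = det3(m)
    solution = []
    for col in range(3):
        swapped = [[rhs[r] if k == col else m[r][k] for k in range(3)]
                   for r in range(3)]
        solution.append(det3(swapped) / d)
    return solution

# A smooth light: equal power at every sampled wavelength.
smooth = {wl: 1.0 for wl in WAVELENGTHS}
target = cone_response(smooth)

# A spiky light: power at only three wavelengths (blue, green, orange/red).
spike_wavelengths = (450, 540, 610)
# mixing[i][j] = sensitivity of cone i at spike j
mixing = [[gaussian(wl, c, w) for wl in spike_wavelengths] for c, w in CONES]
amplitudes = solve3(mixing, target)
spiky = dict(zip(spike_wavelengths, amplitudes))

print("smooth light:", [round(v, 3) for v in target])
print("spiky light: ", [round(v, 3) for v in cone_response(spiky)])
```

The two printed rows agree even though one light has power everywhere and the other at only three wavelengths, which is all that 'same colour' means to a three-channel observer.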

Hopefully this introduces how the eye works without getting too geeky. Let’s take a look at how a digital sensor sees light spectra.

Digital Sensors

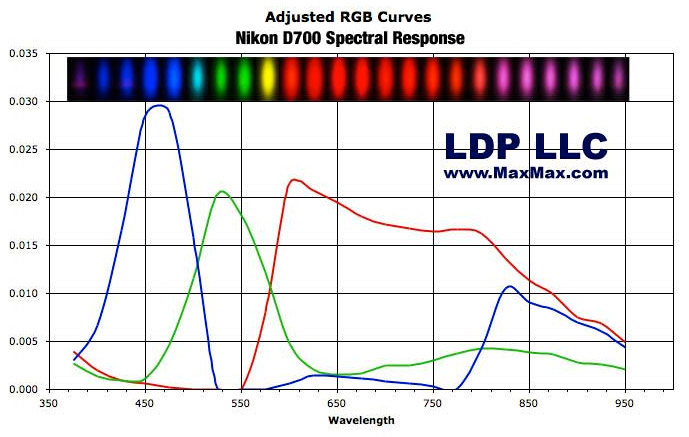

Digital sensors work in a similar way to the eye in that they have three colours. However, the colour ‘spectra’ are different from those of the cones/rods in the eye. Here is a sensor sensitivity spectrum for the Nikon D700 (this image is from the Max Max website - a company that customises digital cameras by removing anti-alias filters or even the whole Bayer array in order to create a true black and white digital camera).

The different coloured curves show the colour response of the red, green and blue filters in the Bayer array.

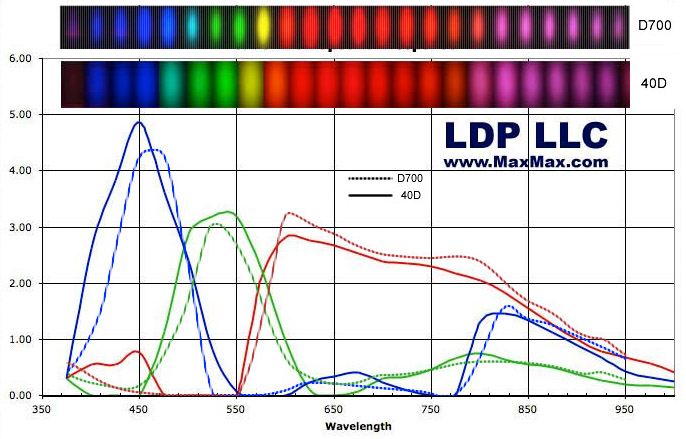

Different cameras can have very different colour sensitivity though; for instance, here is a comparison between the D700 shown above and the Canon 40D (again sourced from Max Max).

We can see from this comparison that the two cameras are producing different colours. The Canon is producing greens that are more yellowy than the Nikon and reds that are more magentary too.

Now this shows that two sensors can have different colour responses. However the argument put forward by many people is that we can just calibrate our sensors, using ICC profiles perhaps, and correct these colour issues. This *may* be correct but is not necessarily so - the following section tries to explain why.
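To make the calibration argument concrete, here is a minimal sketch of a matrix-style correction, which is only one of the forms a profile can take. Everything in it is an assumption for illustration: the `EYE` and `CAMERA` curves are invented bell shapes rather than measured sensitivities, and the training and test lights are arbitrary. The code fits a 3x3 matrix that maps the camera's readings onto the eye's readings for three broad training colours, then tries the same matrix on a narrow-band yellow it was never fitted to.

```python
import math

WAVELENGTHS = range(400, 701, 10)  # nm, sampled every 10 nm

def gaussian(wl, centre, width):
    return math.exp(-0.5 * ((wl - centre) / width) ** 2)

# Hypothetical (centre, width) sensitivity curves in nm; not measured data.
EYE = [(445, 25), (540, 40), (570, 45)]      # S, M, L
CAMERA = [(460, 30), (530, 35), (600, 35)]   # B, G, R

def band(centre, width):
    """A light whose power is a bell-shaped band around one wavelength."""
    return [gaussian(wl, centre, width) for wl in WAVELENGTHS]

def response(spectrum, curves):
    return [sum(p * gaussian(wl, c, w) for wl, p in zip(WAVELENGTHS, spectrum))
            for c, w in curves]

def det3(m):
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def solve3(m, rhs):
    """Solve the 3x3 linear system m @ x = rhs using Cramer's rule."""
    d = det3(m)
    return [det3([[rhs[r] if k == col else m[r][k] for k in range(3)]
                  for r in range(3)]) / d for col in range(3)]

def fit_matrix(training):
    """Find the 3x3 matrix that is exact for the three training lights."""
    cam = [response(s, CAMERA) for s in training]  # one row per training light
    eye = [response(s, EYE) for s in training]
    # Row i of the matrix reproduces eye channel i for every training light.
    return [solve3(cam, [eye[j][i] for j in range(3)]) for i in range(3)]

def error(matrix, spectrum):
    """Largest channel difference between corrected camera and the eye."""
    rgb = response(spectrum, CAMERA)
    predicted = [sum(m * v for m, v in zip(row, rgb)) for row in matrix]
    actual = response(spectrum, EYE)
    return max(abs(p - a) for p, a in zip(predicted, actual))

training = [band(450, 40), band(550, 40), band(620, 40)]
matrix = fit_matrix(training)
train_err = max(error(matrix, s) for s in training)
test_err = error(matrix, band(580, 10))  # narrow yellow, not in the training set

print("worst error on training colours:", train_err)
print("error on the unseen yellow:     ", test_err)
```

The training colours come out essentially perfect while the unseen yellow does not, because the correction only ever sees three numbers per colour and the camera's three numbers do not carry the same information as the eye's.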

Metameric Failure

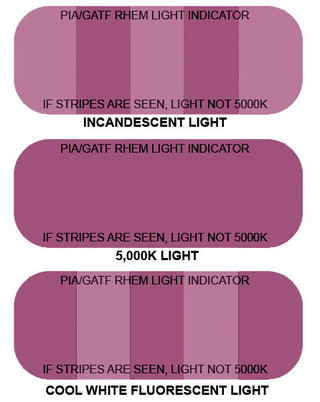

Some of you may have seen the magenta stickers, called RHEM indicators, that look a solid colour under daylight but look striped with different tones under ‘unbalanced’ light.

And here I propose a little experiment. Imagine that we take a picture of one of these stickers and it shows two different tones of magenta (I have done this with my Canon 5DMkII in daylight and shown that this happens). What colour correction can we make to adjust them both back to the same colour? Correct one stripe and you affect the other stripe. There is no way to fix this because it is impossible to know which magenta stripe was the right colour in the first place.
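A tiny numerical sketch of that dilemma, using a single global per-channel gain (the simplest kind of colour correction). The two RGB triples are invented for illustration and are not readings from a real RHEM sticker or a real camera.

```python
# Hypothetical camera RGB readings (0..1) for the two stripes of the sticker.
stripe_a = (0.80, 0.20, 0.60)
stripe_b = (0.72, 0.24, 0.66)

# One global correction: per-channel gains chosen so stripe B lands on stripe A.
gains = tuple(a / b for a, b in zip(stripe_a, stripe_b))

def correct(rgb):
    """Apply the same gains to any pixel, as a global adjustment would."""
    return tuple(v * g for v, g in zip(rgb, gains))

fixed_b = correct(stripe_b)    # now matches the original stripe A
shifted_a = correct(stripe_a)  # but stripe A has been moved by the same gains

print("stripe B after correction:", [round(v, 3) for v in fixed_b])
print("stripe A after correction:", [round(v, 3) for v in shifted_a])
```

Stripe B arrives where stripe A used to be, but stripe A has moved on, so the two stripes still differ. A more selective correction could force the two readings together, but it would do the same to any other pair of objects that happen to produce those readings and really are different colours.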

This form of ‘metameric failure’ is why you may sometimes try some clothes on in a shop and then find that they look different at home. However, in that case it’s the light source that changes, whereas for our digital cameras and film cameras it’s the sensor that changes. We can imagine that instead of the Canon 5DMkII, we use a different camera to photograph the RHEM sticker in daylight and get a solid magenta colour.

Fortunately for us, ISO (the organisation that creates standards and specifications for pretty much everything) has a way of measuring metameric failure in sensors, and it’s used by DxO Mark in their measurements of camera sensors, so let’s take a look at some of the figures. The number given is an abstract percentage value where 100% is perfect and probably unattainable, and where 50% is the metameric error introduced by a difference between daylight and fluorescent lighting. Essentially a score of 90% is very, very good and scores close to 50% are getting on for mobile phone quality. We’ve compiled a small sample of the DxO Mark metamerism results here (I would link to these but they aren’t compiled in a single place).

| Camera | Metamerism score (%) |
| --- | --- |
| Sony A900 | 87 |
| Sony NEX7 | 85 |
| Canon 5D | 84 |
| Nikon D5000 | 83 |
| Nikon D700 | 83 |
| Samsung GX20 | 82 |
| Nikon D90 | 82 |
| Panasonic G3 | 81 |
| Panasonic GX1 | 81 |
| Nikon V1 | 81 |
| Phase IQ180 | 80 |
| Phase P40 | 80 |
| Canon 5DMkII | 80 |
| Olympus E5 | 80 |
| Panasonic GH1 | 79 |
| Nikon D3S | 79 |
| Nikon D3X | 79 |
| Hasselblad H50 | 78 |
| Canon 7D | 78 |
| Panasonic GH2 | 77 |
| Fuji X100 | 77 |
| Phase P65+ | 76 |
| Fuji X10 | 76 |
| Leica M9 | 76 |
| Canon G11 | 76 |
| Panasonic LX5 | 75 |
| Hasselblad H39 | 75 |
| Aptus Leaf | 75 |
| Samsung EX1 | 74 |
| Phase P45 | 72 |

The table reflects some of the experiences I have had with colour, starting with the difference between my 5DMkII and Dav Thomas’s Sony A900. I’ve noticed that the Canon 5DMkII has less accurate colour than the 5D, and in the recent tests we noticed that the Nikon D3X had better colour than the 5DMkII. The comparison that really showed up the difference was between the Sony A900 and the Phase P45+. To find out that these two cameras were the best and the worst in this list reinforced my belief that it is a metamerism problem that I’ve been seeing.

It also highlights that it isn’t necessarily the sensor that is causing the problem, as the Nikon D3X and Sony A900 both have the same sensor and yet score differently. I am presuming this is to do with the dyes used in the Bayer colour filters. The Nikon D3X has a lot better low light capability, so I’m guessing it uses more transparent filters (think about how much a dense red filter needs in exposure compensation - making the filter less strong would let in more light but possibly make the colour less accurate?). NB: after reading around on the web, it turns out that Iliah Borg has checked the filters on the Sony and confirms my suspicions, and in many tests the Sony has outperformed nearly all other cameras (including the Sony A850) for colour accuracy.

I should add here that a low score on this table does not mean that the photographs produced will look bad. The distortion could be pleasing - for example, Velvia would probably come out scoring quite low, but some would say the distortion looks better than reality. However, it is most likely that the score does reflect a problem rather than an advantage.

Conclusion

I hope that the above discussion has not been too geeky, as I think the conclusions drawn from it are very important for landscape photography. Put simply, it may not be possible to take the raw file from a digital camera and, using ICC profiles or Photoshop curves, adjust it to look just like reality. There are many materials and conditions where the results from a digital camera (or film camera for that matter) do not match up with real life. Unfortunately, landscape photography is one of those areas where colour changes are probably more prominent than in other genres, because the colours of nature, such as chlorophyll, are quite sensitive to metameric failure: the interaction between the chlorophyll spectrum and the sensor sensitivity might not generate the right green even though all other colours look correct.

This leads into one of our future tests, where we take a look at a set of cameras to assess just how good their colour response is, how far you can ‘fix’ the colour using profiling, and how aesthetically pleasing the results are. We hope to test a range of top end DSLRs, high end compacts and perhaps a couple of low end medium format backs.